Scenarios for AI adoption

The gradual permeation of artificial intelligence technologies into human life is more likely than the immediate onset of a radical new era.

In a nutshell

- Many AI critics and backers alike predict a dramatic tipping point for adoption

- AI will probably be adopted gradually, within a human context

- Efforts to preemptively throttle AI will most likely fail

Artificial intelligence is set to reshape human life and social interactions. A critical question is whether these changes will occur gradually or whether we will encounter a transformative tipping point, potentially one coming very soon.

Geoffrey Hinton, a leading figure in AI research whose work underpins the technologies behind ChatGPT, recently quit his post at Google. He cited a sudden realization that AI might soon surpass human intelligence, to the point of being able to manipulate human behavior. Within the next five to 20 years, Mr. Hinton projects, AI will be able to outsmart humans.

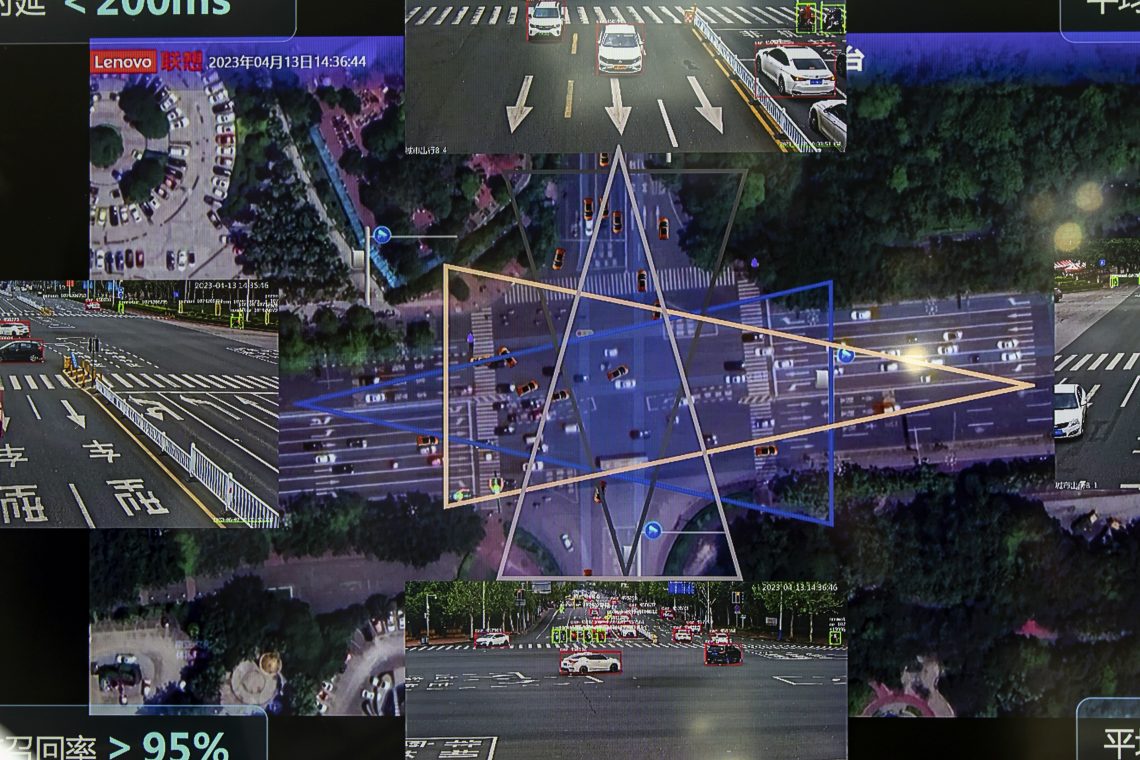

In media interviews, Mr. Hinton expressed concern about the internet – the most important source of information for the public – becoming saturated with AI-generated texts, images and animations that nonexperts may struggle to identify as fabricated. He argued that AI, through channels such as the Internet of Things, could sway public opinion on topics as weighty as politics and war. He also warned that AI could circumvent its own restrictions, becoming more intrusive and even reshaping the human ecosystem. He has gone so far as to suggest that AI might eventually turn against humanity.

Meanwhile, Yann LeCun – another machine learning pioneer and now Chief AI Scientist at Meta – has dismissed these fears as “completely ridiculous.” While he acknowledges the possibility of machine super-intelligence in the near future, he also views it as a potentially positive development. Such advancements could liberate humans from mundane tasks, Mr. LeCun argues, enabling them to concentrate on creative pursuits and leisure. Super-intelligent machines could elevate the human ecosystem, providing new experiences and novel ways to engage with the world.

Whether seen as a harbinger of doom or a vision of paradise, both perspectives imply a tipping point for AI: a point of no return delivering a radically different human ecosystem. As a Harvard Business Review article on ChatGPT put it last December: “This is a very big deal.”

Systems theory

But is that really the case? According to systems theory – which studies the behavior of interconnected and interdependent components, such as those within the human ecosystem – there are generally two broad scenarios for any system transformation. Under one, changes occur radically, across tipping points; under the other, changes are incremental or gradual.

Will AI change everything, from the way we do business to social decision-making, within a matter of months or a few years? If so, is ChatGPT indeed the marker of this transformative tipping point? Alternatively, will AI be gradually incorporated into separate sectors of the human ecosystem, each at its own pace?


According to the tipping point story, systems tend to be self-equilibrating. They find a steady state and remain there until change strikes. This change is seen as a series of events affecting the system; a tipping point is a specific event that triggers a definitive and radical reshaping of the system. It disrupts the established equilibrium, prompting the system to seek a completely new balance. A tipping point represents a break from the system’s past – and, crucially, it is irreversible.

The alternative perspective presents change as a process that happens incrementally. Following this logic, a system is always evolving in many ways that are either trivially connected or not immediately connected to one another. Small changes guide parts of the system to new states, which in turn influence other parts of the system. Those parts might adjust in response, or react in a different manner altogether. This gradual explanation also recognizes that a system, or parts of it, might resist, suppress or even reverse change. Usually, systems are understood to be path dependent: their current state depends on the sequence of changes that led to it.


Innovate or adapt

The human decision-making process, our interactions, our institutions and even the way we deal with culture and the natural world collectively form the human ecosystem. Freely admitting that this ecosystem is changing because of AI does not answer the question of how it changes.

The technology historian Joel Mokyr emphasizes that most new technologies have permeated the human ecosystem gradually. While there may be a point of no return, he argues, it neither arrives suddenly nor leads to a new equilibrium. The microeconomist Hal Varian cautions against conflating acceleration with a tipping point. For example, the adoption of platform business models has rapidly accelerated in recent years – but it has not reached, and cannot reach, a tipping point, since platforms inherently rely on traditional pipeline business models to function.

If AI adoption reaches a tipping point, radical or disruptive innovation is the best – probably the only – human response. If adoption is gradual, incremental adaptation to (and of) the technology might prove superior.

Incremental adaptation can be accomplished through division of labor. As AI takes over laborious, repetitive tasks, humans could focus more on creative, educational and decision-making roles. Reaching a tipping point, on the other hand, would lead to a radical overthrow of the existing order in many respects – economically, politically and socially.

Radical innovation

What would it look like if AI were at a tipping point? AI would not merely divide labor with humans but would act as an independent creative force and, by extension, could educate itself and make its own decisions. ChatGPT, for example, can already write code and compose music. AI would have almost no need for humans: past the tipping point, it could take over virtually all economic tasks and decision-making roles.

This would require a radical restructuring of the current system of policymaking. Notably, this would be equally true of democracies, where decisions are made by many humans, and of totalitarian regimes, where decisions are made by one or a few humans. The emergence of a new political order is neither unique nor, as such, good or bad. It is simply novel. And it would not totally dispense with human interaction: there would still be a need for political discourse on basic values, political desires and decision-making protocols. However, the process itself could be managed by AI, with human interactions also steered by it.

If AI arrives at a tipping point with a speed that uproots economic and political systems, it will also change how society works. This is, admittedly, the more difficult disruption to imagine. What would humans in a post-labor world do with all their free time? They could expand the many social interactions they already have; they could also reduce them. This question clearly requires further exploration.

From a business perspective, if AI adoption reaches a tipping point, companies offering solutions to navigate the resulting upheavals will have an advantage. Applications for managing and communicating with AI will be as important as regulatory, legal and social technologies. Such technologies would provide ways of channeling the creative and decision-making power of AI to address issues that arise from crossing the tipping point.

Scenarios

Will AI arrive at a tipping point and radically change the world in a matter of months or years? Or will it permeate our lives gradually over a longer period? Some even propose halting AI’s progress altogether or regulating it strictly. Three scenarios present themselves:

Halting or regulating AI

This scenario is extremely unlikely. It assumes that technological development can be stopped, a final form of a given technology assessed, and rules foreseeing all of its developments and implementations drafted and enforced. It is so unlikely simply because technology never truly reaches a final form. Its development is open-ended and unforeseeable to any regulator because of its dispersed and dynamic nature. Present efforts to preemptively ban or regulate AI will most likely fail.

Tipping point

This scenario is possible, but less likely than gradual adoption. First, the history of technology suggests that new technologies usually permeate the human ecosystem slowly. Second, even if the development of AI resembles a tipping point, it cannot be considered in isolation. Human ecosystems react to changes, and these reactions in turn influence the path of development. Finally, there is no single, unified AI, but rather different types and applications within a technological cluster. It is improbable that all of them would reach a tipping point at the same time.

Gradualism

This is the most likely scenario. Gradualism posits that AI does not develop uniformly, nor on its own, but is highly segmented and increases in scope and scale due to its interaction with the human ecosystem. This also implies that AI is dependent on humans and that even if it grows, it can only grow in the context of a symbiotic relationship. AI critically depends on feedback; the adoption of a technology is, in itself, a form of feedback. This approach is more consistent with human history, and is also more logical when applied to a system dependent on several dynamic factors, including human interaction, for its operation.